“I’m Increasingly Convinced Nobody Here Actually Understands What AI Is” – A Practical Guide for The One Person Who Does

You’re in yet another meeting. Someone says, “Let’s just have AI do it,” and the room nods like that solves everything. You, meanwhile, are mentally listing hallucinations, bias, missing data, and who gets blamed when this thing goes sideways.

This guide is for the technical power user or AI‑savvy knowledge worker who feels like the only adult in the room whenever “AI strategy” comes up. By the end, you’ll have language, frameworks, and conversation patterns to turn vague AI enthusiasm into realistic, safe, and genuinely useful deployments.

The Problem – When Everyone Loves “AI” But Nobody Understands It

Here’s the core misalignment: most people around you treat AI like a magical, infallible decision-maker instead of a probabilistic pattern‑matching system that can be confidently wrong. They assume “AI” will figure it out if you just point it at a problem, skip the boring data work, and automate human judgment out of the loop.

You see the fallout: projects scoped as if models never hallucinate, “AI will replace X team” narratives that ignore edge cases, and workflows that quietly bypass human review in high‑stakes decisions. It leaves you feeling isolated, overruled, and weirdly responsible for failures you warned about but couldn’t articulate in a way non‑experts would accept.

Agitation – The Hidden Risks of Misunderstanding What AI Actually Is

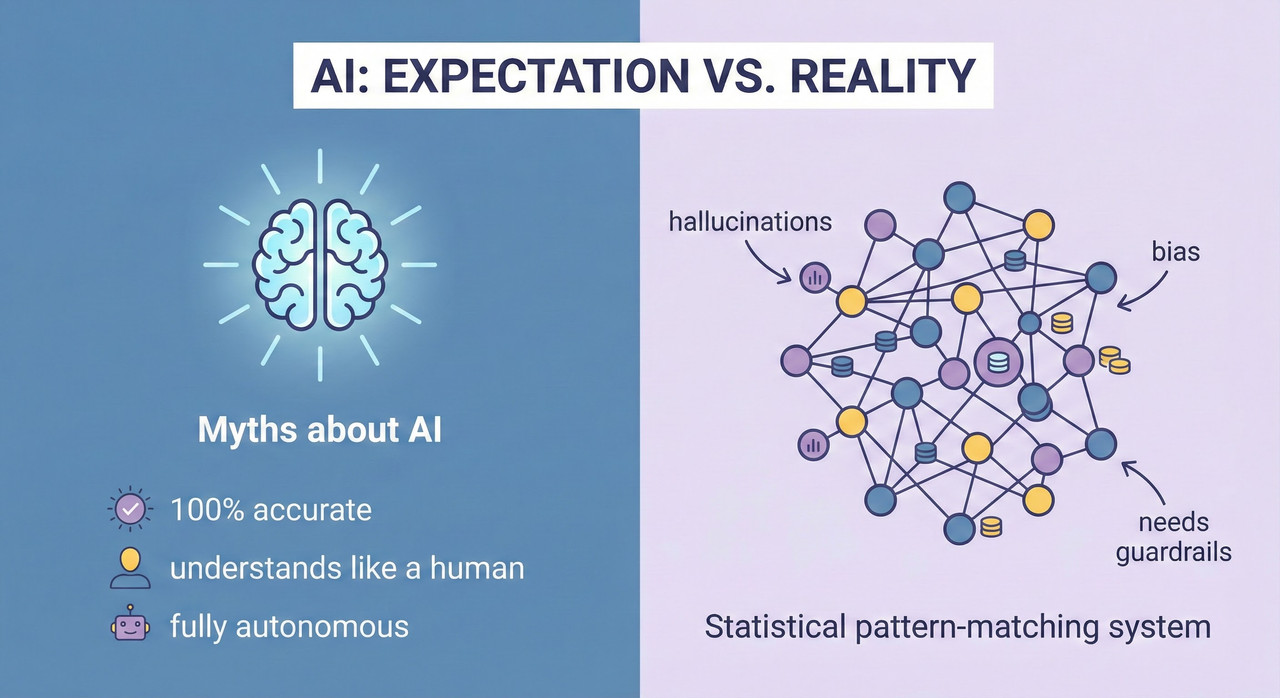

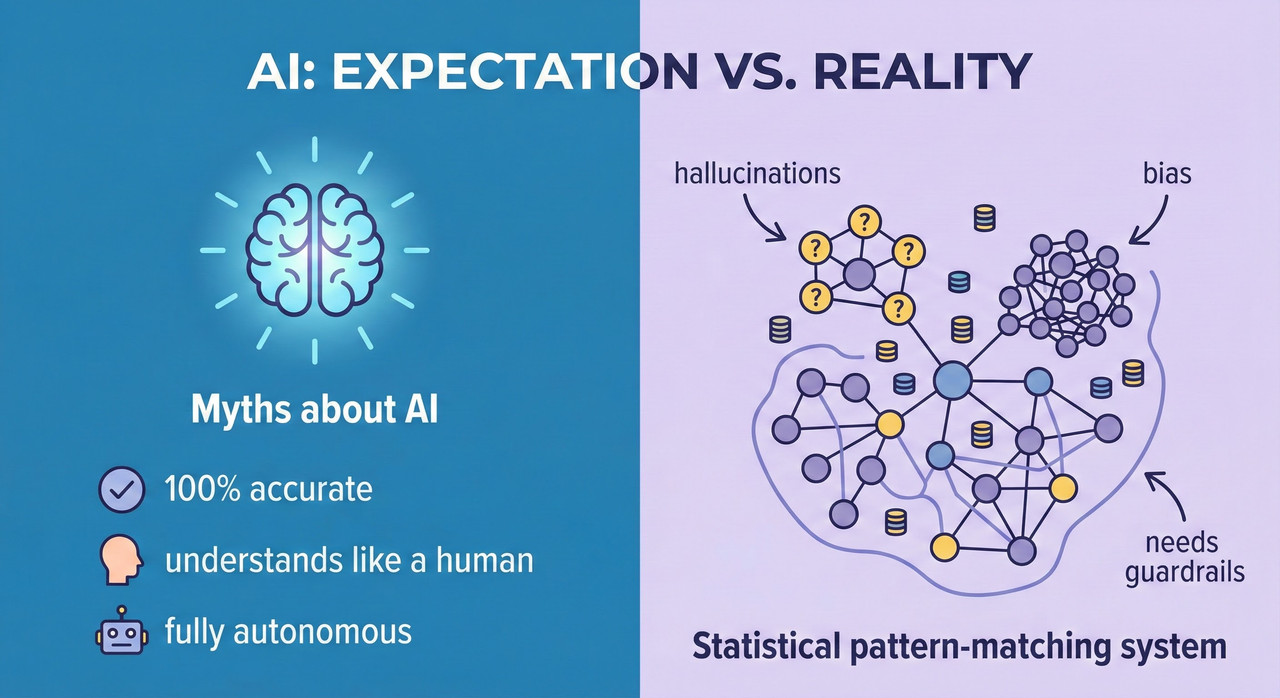

Underestimating what AI is (and isn’t) doesn’t just create awkward meetings; it creates real organizational risk. Common misconceptions look like:

- “AI is 100% accurate and keeps getting smarter, so it’ll surpass our experts soon.”

- “AI understands like a person and can ‘reason through’ gaps in data.”

- “If we just feed it enough data, it will figure out the right answer.”

- “We can replace expert reviewers because AI won’t miss the obvious stuff.”

The consequences are brutal: hallucinated outputs trusted as fact, biased decisions scaled across thousands of users, brittle automations that quietly fail, and pilots that never graduate from demo to production. Add regulatory and reputational risk when wrong outputs hit customers, regulators, or the press, and you get the AI‑fatigue pattern many companies are already reporting.

Under the hood, these problems map to known failure modes: hallucinations (confident nonsense), bias from skewed or incomplete data, misinterpretation of ambiguous prompts, and models that struggle to catch their own mistakes in the same context. High AI project failure rates are often driven less by weak models and more by leaders misunderstanding the technology, the data, and the workflows needed to make it safe and reliable.

Agitation – Why Explaining “LLMs Are Just Next-Token Predictors” Hasn’t Worked

You’ve probably tried the line: “Look, LLMs are just next‑token predictors, basically fancy autocomplete.” Technically true, but it rarely changes behavior. People nod along when you say “AI can hallucinate” and then design workflows that assume outputs are correct by default.
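If you want to make "next‑token predictor" concrete rather than abstract, a toy sketch helps. The prompt and probabilities below are invented for illustration; a real model computes a distribution like this over a very large vocabulary at every step. The point is what the sampling step does *not* do: nothing in it checks whether the chosen word is true.

```python
import random

# Hypothetical, hand-written next-token probabilities (illustration only).
# A real LLM computes a distribution like this at every generation step.
NEXT_TOKEN_PROBS = {
    "The capital of Australia is": {
        "Canberra": 0.6,
        "Sydney": 0.3,  # plausible-sounding but wrong
        "Melbourne": 0.1,
    },
}


def sample_next_token(prompt: str, rng: random.Random) -> str:
    """Pick one continuation in proportion to its probability; no fact-checking happens here."""
    dist = NEXT_TOKEN_PROBS[prompt]
    tokens = list(dist)
    weights = list(dist.values())
    return rng.choices(tokens, weights=weights, k=1)[0]


rng = random.Random(0)  # fixed seed so the run is repeatable
prompt = "The capital of Australia is"
samples = [sample_next_token(prompt, rng) for _ in range(1000)]
for token in NEXT_TOKEN_PROBS[prompt]:
    print(f"{token}: {samples.count(token)} / 1000")
```

Run it and a sizeable share of answers say "Sydney", delivered just as fluently as the correct one. That is "confidently wrong" in a single line of behavior.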

There’s a gap between conceptual understanding and operational behavior: stakeholders accept that AI “can be wrong,” but still ship systems that treat it like a deterministic rules engine. Cognitive biases do a lot of damage here: tech‑savior narratives, dashboard theater (slick demos over robust systems), and an obsession with shipping impressive AI features fast rather than safely.

That leaves you in a familiar position: every time you bring up guardrails, uncertainty, or human review, you risk being labeled “negative” or “anti‑innovation” even though you’re the one actually trying to make AI useful and sustainable. Without a better framing, you end up either arguing endlessly or going quiet and watching projects fail in slow motion.

Solution – Reframing AI in Plain Language That Non-Experts Will Actually Hear

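The goal is not to turn everyone into ML researchers; it’s to give them a mental model that is simple, sticky, and operationally useful. A working, “kitchen‑table” definition you can use: generative AI is a probabilistic pattern‑matching system that produces the most plausible‑looking output based on patterns in its training data. That makes it fast and often useful, and it also means it can be confidently wrong.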

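For leadership, an elevator script like this lands better than a lecture on architectures: “AI is a fast assistant that is sometimes wrong. It drafts, summarizes, and suggests; our people check the work and make the call.”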

Position AI as assistive: think “very fast, somewhat flaky intern” rather than “all‑knowing expert.” That metaphor naturally implies supervision, feedback, and scope boundaries, all of which you need to reduce risk.

Solution – Mapping Misconceptions to Concrete Failure Modes

Right now, your pushback probably sounds emotional: “I’m worried this is risky.” To change the conversation, you want to map each misconception to a specific, nameable failure mode and example.

Here’s the kind of mapping table to surface:

- “AI never makes mistakes” → hallucinations and overconfident nonsense, e.g., a model inventing legal cases or medical citations that don’t exist.

- “AI understands like a human” → lack of a true world model, leading to answers that sound right but violate real‑world constraints or safety rules.

- “More data will fix it” → more volume doesn’t fix skewed or low‑quality data, and exposure bias still causes cascade failures: one early wrong token steers everything generated after it.

- “We don’t need humans in the loop” → unchecked propagation of biases or hallucinations into customer‑facing decisions.

Once you name the pattern (“this use case is vulnerable to hallucinations plus overconfidence”), you can ask more precise questions like, “Which failure modes are acceptable here, and how are we mitigating them?” instead of, “Is this safe?”
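If you want to turn the mapping into something you can reuse in every project review, a small risk register works. This is a minimal sketch, assuming you tag each use case with a few traits; the trait names (`open_ended_answers`, `customer_facing_without_review`, and so on) and the `risk_questions` helper are hypothetical labels for this example, while the failure modes come from the mapping above.

```python
from dataclasses import dataclass, field

# Failure modes from the mapping above, each paired with the question to ask.
FAILURE_MODES = {
    "hallucination": "Which confidently wrong outputs can we tolerate, and how will we catch the rest?",
    "no_world_model": "Which real-world constraints or safety rules must every output respect, and who checks them?",
    "data_quality": "Where does the data come from, and what happens when an early step goes wrong?",
    "unreviewed_propagation": "Who reviews outputs before they reach customers or decisions?",
}

# Hypothetical use-case traits and the failure modes each one exposes.
TRAIT_TO_MODES = {
    "open_ended_answers": ["hallucination"],
    "acts_in_physical_or_regulated_world": ["no_world_model"],
    "grounded_on_internal_data": ["data_quality"],
    "customer_facing_without_review": ["unreviewed_propagation", "hallucination"],
}


@dataclass
class UseCase:
    name: str
    traits: set[str] = field(default_factory=set)


def risk_questions(use_case: UseCase) -> dict[str, str]:
    """Return each failure mode this use case is exposed to, with its review question."""
    modes: dict[str, str] = {}
    for trait in sorted(use_case.traits):
        for mode in TRAIT_TO_MODES.get(trait, []):
            modes[mode] = FAILURE_MODES[mode]
    return modes


chatbot = UseCase("refund chatbot", {"open_ended_answers", "customer_facing_without_review"})
for mode, question in risk_questions(chatbot).items():
    print(f"{mode}: {question}")
```

The output is a short list of named failure modes with one question each, which is exactly the shift from "Is this safe?" to "Which failure modes are acceptable here?"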

Solution – A Simple Guardrail Framework You Can Advocate For

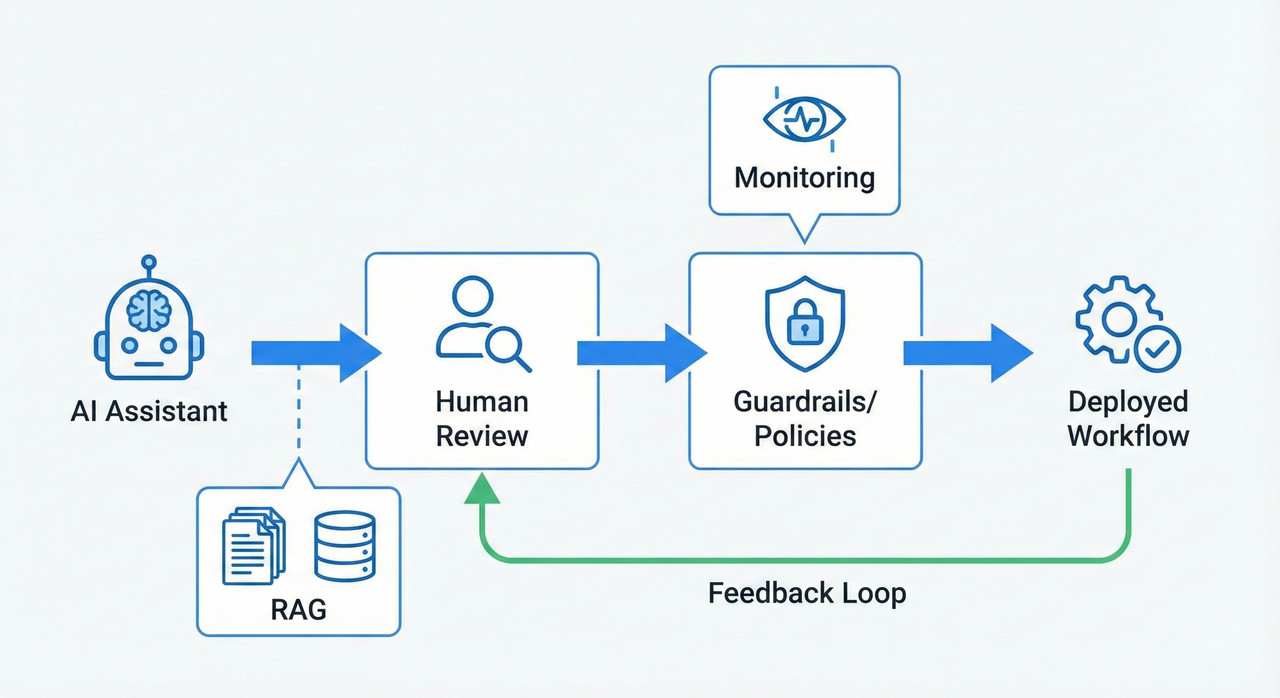

You don’t have to be “Head of AI” to influence how AI is deployed. A simple three‑pillar guardrail framework is often enough to reorient the conversation.

- Data and context

Use retrieval‑augmented generation (RAG) so models ground their answers in verified, domain‑specific sources instead of guessing from training noise. Maintain a curated knowledge base or ground‑truth dataset, and be explicit about what the model doesn’t know (time cut‑offs, missing domains, etc.).

- Human‑in‑the‑loop

Define where human review is mandatory: medical, legal, financial, HR, or safety‑critical use cases should never rely on unreviewed AI outputs. Assign clear ownership: who signs off before outputs affect customers, operations, or compliance.

- Monitoring and feedback

Log hallucinations, biased outputs, and failure cases rather than treating them as one‑off glitches. Use this log to improve prompts, policies, RAG sources, or even tool selection over time.

This framework maps directly onto the misconceptions above, and it answers the usual objection too: guardrails don’t kill innovation; they make it possible for AI projects to survive contact with reality.
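Here is a minimal sketch of how the three pillars might fit together in code, assuming a tiny in‑memory knowledge base. Everything in it is hypothetical: the document IDs, the `answer_with_guardrails` function, and the keyword‑overlap `retrieve` function, which is a toy stand‑in for a real RAG retriever (production systems typically use embeddings or a search index). No LLM is called; the draft simply quotes the retrieved text.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical curated knowledge base: document ID -> verified, version-controlled text.
KNOWLEDGE_BASE = {
    "policy-travel-v3": "Economy class is required for flights under six hours.",
    "policy-expenses-v2": "Meals are reimbursed up to the daily limit with receipts.",
}
# Domains that should never rely on unreviewed AI output.
HIGH_STAKES = {"medical", "legal", "financial", "hr", "safety"}
STOPWORDS = {"a", "an", "the", "is", "are", "for", "to", "of", "my", "what", "with", "up", "under"}


def tokens(text: str) -> set[str]:
    """Lowercase content words, minus a tiny stopword list."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def retrieve(question: str, min_overlap: int = 2) -> list[str]:
    """Toy keyword-overlap retrieval standing in for a real RAG retriever."""
    q = tokens(question)
    return [doc_id for doc_id, text in KNOWLEDGE_BASE.items() if len(q & tokens(text)) >= min_overlap]


@dataclass
class Decision:
    answer: Optional[str]
    sources: list[str]
    needs_human_review: bool
    abstained: bool


@dataclass
class FailureLog:
    entries: list[dict] = field(default_factory=list)

    def record(self, question: str, reason: str) -> None:
        self.entries.append({"question": question, "reason": reason})


def answer_with_guardrails(question: str, domain: str, log: FailureLog) -> Decision:
    sources = retrieve(question)
    if not sources:
        # Pillar 1 (data and context): nothing to ground on, so abstain instead of guessing.
        # Pillar 3 (monitoring): log the gap so the knowledge base can be improved.
        log.record(question, "no grounding sources; abstained")
        return Decision(None, [], needs_human_review=True, abstained=True)
    # A real system would have an LLM draft from these sources; this sketch just quotes them.
    draft = " ".join(KNOWLEDGE_BASE[s] for s in sources)
    # Pillar 2 (human-in-the-loop): high-stakes domains always go to a named reviewer.
    return Decision(draft, sources, needs_human_review=domain.lower() in HIGH_STAKES, abstained=False)


log = FailureLog()
travel = answer_with_guardrails("Is economy class required for my flights?", "HR", log)
dosage = answer_with_guardrails("What is the dosage for ibuprofen?", "medical", log)
print(travel)
print(dosage)
print(log.entries)
```

The design choice worth defending in meetings is the early return: when grounding fails, the system says so, hands the question to a person, and leaves a log entry, instead of producing a fluent guess.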

Solution – Conversation Patterns for Pushing Back Without Being “The AI Police”

You don’t win these battles by dumping PDFs; you win them with better questions in the room. A few conversation patterns you can keep in your back pocket:

- To challenge magical thinking:

“Where are we comfortable with this system being confidently wrong, and where are we not?”

- To insert human review:

“Who signs off on this output before it affects customers or compliance?”

- To set boundaries:

“This use case needs citations and an abstain option; otherwise, we’re encoding hallucinations as policy.”

Framing your concerns as risk management and value protection helps you avoid being painted as anti‑AI. You’re not blocking progress; you’re preventing the team from shipping a demo that becomes a liability instead of an asset.
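"Citations and an abstain option" can sound abstract, so here is a minimal sketch of what enforcing it might look like. It assumes a hypothetical inline citation format of `[doc-id]` and a set of document IDs the retriever actually returned; the `enforce_citations` name and the abstain wording are illustrative, not from any particular tool.

```python
import re

ABSTAIN = "I can't answer that from the approved sources. Please ask the policy owner."

# Hypothetical inline citation format: [doc-id], e.g. "[policy-travel-v3]".
CITATION = re.compile(r"\[([a-z0-9-]+)\]")


def enforce_citations(draft: str, retrieved_ids: set[str]) -> str:
    """Release the draft only if it cites at least one retrieved source and nothing else."""
    cited = set(CITATION.findall(draft))
    if not cited:
        return ABSTAIN  # uncited claims are treated as unverified
    if not cited <= retrieved_ids:
        return ABSTAIN  # cites a document that was never retrieved: likely hallucinated
    return draft


retrieved = {"policy-travel-v3"}
print(enforce_citations("Book economy for short flights [policy-travel-v3].", retrieved))
print(enforce_citations("Business class is fine [policy-travel-v9].", retrieved))
print(enforce_citations("Business class is always fine.", retrieved))
```

This only checks that citations point at real, retrieved documents; it cannot confirm that the cited text actually supports the sentence, which is one more reason the human sign‑off question still matters.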

Solution – Designing AI-First Workflows That Actually Leverage Its Strengths

To move beyond “no,” you need to offer better “yeses.” Generative AI shines in workflows where outputs are easy to verify, low‑stakes, or primarily assistive.

Good fit examples:

- Support email triage: AI drafts classification and suggested responses, agents review and edit, and the system learns from which suggestions get accepted.

- Marketing drafts: AI generates first‑pass copy from structured briefs, humans refine, brand/legal review before publishing.

- Internal policy Q&A with RAG: Employees ask questions, AI responds only using retrieved, version‑controlled policy documents with inline citations.

In contrast, AI should assist rather than decide in medical, legal, financial, HR, and other safety‑critical or reputationally sensitive domains. The pattern you are advocating is consistent: AI drafts; humans decide.
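The support‑triage example is the easiest place to see "AI drafts; humans decide" as a concrete workflow. Below is a minimal sketch, assuming the AI has already produced a suggested category and draft reply; the `Suggestion` and `ReviewQueue` names and the accepted/edited/rejected outcomes are hypothetical. It only tallies outcomes as a feedback signal; it does not retrain anything.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class Suggestion:
    ticket_id: str
    category: str     # AI-suggested classification
    draft_reply: str  # AI-drafted response


class ReviewQueue:
    """AI drafts; a human agent decides. Outcomes are tallied per category."""

    OUTCOMES = {"accepted", "edited", "rejected"}

    def __init__(self) -> None:
        self.outcomes: dict[str, Counter] = defaultdict(Counter)
        self.sent: dict[str, str] = {}  # ticket_id -> reply actually sent to the customer

    def review(self, s: Suggestion, outcome: str, final_reply: Optional[str] = None) -> None:
        """Record the agent's decision; only accepted or human-edited replies are sent."""
        if outcome not in self.OUTCOMES:
            raise ValueError(f"unknown outcome: {outcome!r}")
        if outcome == "edited" and final_reply is None:
            raise ValueError("an edited suggestion needs the agent's final reply")
        self.outcomes[s.category][outcome] += 1
        if outcome == "accepted":
            self.sent[s.ticket_id] = s.draft_reply
        elif outcome == "edited":
            self.sent[s.ticket_id] = final_reply
        # "rejected": nothing from the draft is sent; the agent replies manually.

    def acceptance_rate(self, category: str) -> float:
        """Share of suggestions in a category that agents sent unchanged."""
        counts = self.outcomes.get(category, Counter())
        total = sum(counts.values())
        return counts["accepted"] / total if total else 0.0


queue = ReviewQueue()
queue.review(Suggestion("T1", "billing", "Your refund has been issued."), "accepted")
queue.review(Suggestion("T2", "billing", "Please reset your password."), "edited",
             final_reply="Your invoice is attached.")
queue.review(Suggestion("T3", "billing", "Closing this ticket."), "rejected")
queue.review(Suggestion("T4", "shipping", "Your parcel is on its way."), "accepted")
print(queue.acceptance_rate("billing"), queue.acceptance_rate("shipping"))
```

A low acceptance rate in one category is a useful, non‑emotional signal in a meeting: it tells you where the drafts need better prompts or sources before anyone talks about removing the reviewer.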

When To Walk Away – Recognizing Unfixable AI Misalignment

Not every environment is fixable, and part of protecting your career is recognizing that early. Red flags include:

- Leadership ignoring documented failure cases or external evidence about AI risks.

- Refusing basic guardrails (“human review would slow us down,” “we don’t need logs”).

- Punishing people who question unreviewed AI outputs or who refuse to sign off on them.

In those contexts, document your concerns and the decisions made anyway, so there’s a paper trail showing you flagged the risks in good faith. Then make a sober call: do you stay and limit your exposure, or start planning a move to a team that treats AI as a serious systems problem rather than a toy demo?

Putting It All Together – From “No One Gets AI” to Being the Quiet Architect

You’re not crazy for feeling like nobody around you truly understands what AI is; the misunderstanding is widespread, even in senior leadership. But that also means your literacy is an asset—if you can translate it into frameworks and conversations people can use.

Start with one live AI initiative at work: map the misconceptions, identify the likely failure modes, and propose a lightweight guardrail framework plus one or two better‑fit workflows. Keep a simple “AI risks and wins” log—where guardrails prevented damage or helped projects succeed—and over time, you stop being “the skeptic” and become the quiet architect people rely on when AI really matters.
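The "AI risks and wins" log can start as a spreadsheet, but if you prefer something scriptable, here is a minimal sketch. The `LogEntry` fields, the `risk`/`win` kinds, and the sample entries are all made up for illustration; swap in whatever your team actually tracks.

```python
import csv
import io
from collections import Counter
from dataclasses import asdict, dataclass, fields
from datetime import date


@dataclass
class LogEntry:
    day: date
    initiative: str
    kind: str       # "risk" = a guardrail prevented damage; "win" = a guardrail helped a project succeed
    guardrail: str  # e.g. "human review", "RAG grounding", "abstain option"
    note: str


class RisksAndWinsLog:
    KINDS = {"risk", "win"}

    def __init__(self) -> None:
        self.entries: list[LogEntry] = []

    def add(self, entry: LogEntry) -> None:
        if entry.kind not in self.KINDS:
            raise ValueError(f"kind must be one of {sorted(self.KINDS)}")
        self.entries.append(entry)

    def by_guardrail(self) -> Counter:
        """How often each guardrail appears across logged risks and wins."""
        return Counter(e.guardrail for e in self.entries)

    def to_csv(self) -> str:
        """Export the log as CSV text, ready to share with stakeholders."""
        buf = io.StringIO()
        writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(LogEntry)])
        writer.writeheader()
        for e in self.entries:
            writer.writerow(asdict(e))
        return buf.getvalue()


# Made-up example entries.
log = RisksAndWinsLog()
log.add(LogEntry(date(2024, 3, 4), "policy Q&A bot", "risk", "abstain option",
                 "Declined a question outside the approved policies instead of guessing."))
log.add(LogEntry(date(2024, 3, 11), "support triage", "win", "human review",
                 "Agents caught a wrong refund amount in a draft before it was sent."))
print(log.by_guardrail())
print(log.to_csv())
```

Grouping by guardrail is the useful view over time: it shows which guardrails keep paying for themselves, which is the evidence that turns "the skeptic" into the person people consult.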
