How Much Water Does AI Use Per Prompt? The Real Numbers (2025 Update)

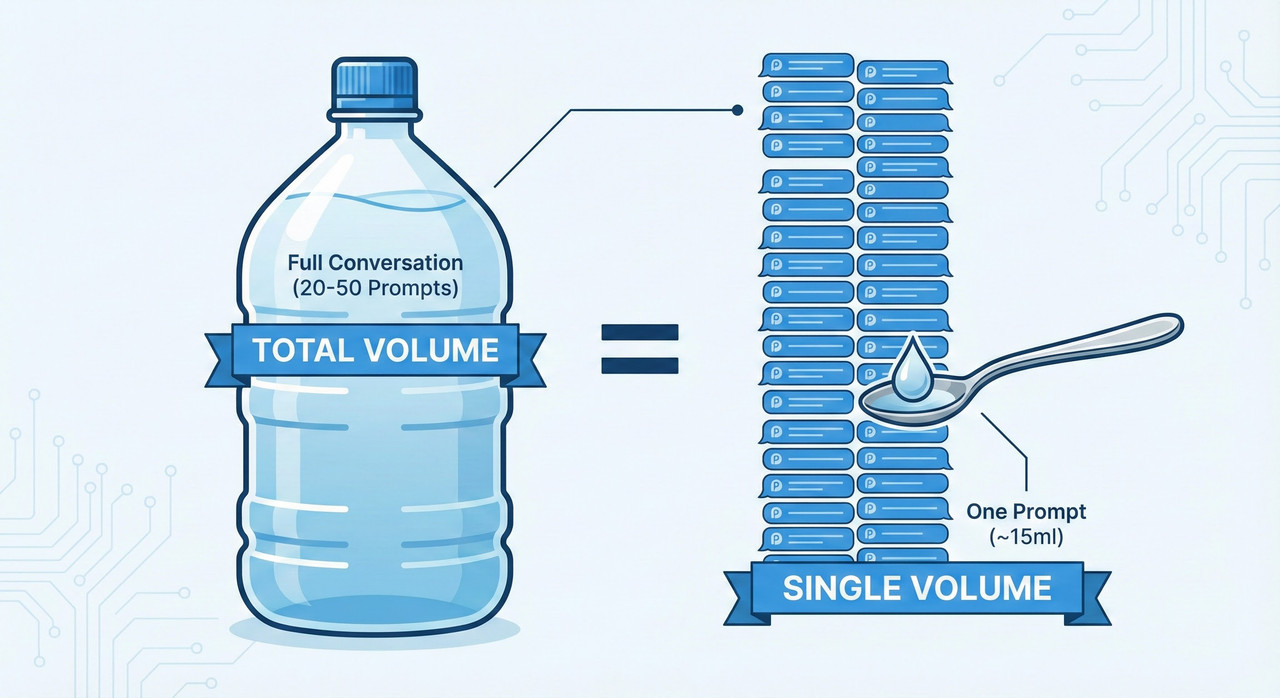

You’ve probably seen the headline that sent shockwaves through the eco-conscious tech community: “ChatGPT drinks a bottle of water for every conversation.”

It’s a startling image—a plastic bottle drained every time you ask for a cookie recipe. But in the world of data centers and large language models (LLMs), headlines often lack nuance. While the environmental impact of AI is real and significant, the “per prompt” reality is a bit more complex, and thankfully, often less severe than the panic suggests.

So, what is the short answer? If you need a specific number right now for your research or peace of mind, here is the technical reality:

A standard query on a model like GPT-4 consumes approximately 10ml to 25ml of water. That is roughly 2 to 5 teaspoons.

However, the landscape is shifting rapidly. Newer, optimized models released in late 2024 and 2025, such as Google’s Gemini or OpenAI’s “mini” variants, have driven this number down significantly. Google, for example, has reported a figure of about 0.26ml (roughly 5 drops) for a median Gemini text prompt.

The Math: Breaking Down the “Water Bottle” Myth

To understand where these numbers come from, we have to look at the source that started the conversation. The widely cited figure comes from a 2023 study by the University of California, Riverside (UCR).

Researchers estimated that a 500ml bottle of water is consumed for every 20 to 50 questions asked. The confusion in the media arose when headlines conflated a “conversation” (which the researchers treated as 20 to 50 questions) with a single “prompt.”

Here is the simple math based on that study:

- 500ml (Total Water) ÷ 50 Prompts = 10ml per prompt.

- 500ml (Total Water) ÷ 20 Prompts = 25ml per prompt.
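
If you want to check the arithmetic yourself, here is a minimal Python sketch. The function name and the teaspoon conversion (about 4.93ml per US teaspoon) are our own choices for illustration, not something taken from the study.

```python
BOTTLE_ML = 500.0        # the bottle size used in the study's framing
ML_PER_TEASPOON = 4.93   # approximate size of a US teaspoon (assumption)

def ml_per_prompt(bottle_ml: float, prompts: int) -> float:
    """Spread one bottle of water evenly across a number of prompts."""
    return bottle_ml / prompts

# The two ends of the 20-to-50 range quoted above.
for prompts in (50, 20):
    ml = ml_per_prompt(BOTTLE_ML, prompts)
    teaspoons = ml / ML_PER_TEASPOON
    print(f"{prompts} prompts -> {ml:.0f} ml each (about {teaspoons:.1f} tsp)")
```

The per-prompt figure is just an even split of the bottle, so it says nothing about how long or short any individual prompt is.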

The 2025 Efficiency Shift

There is a big gap in the information you see on the first page of Google: most articles are still citing 2023 data. In the world of AI, two years is a century.

As of 2025, models have become drastically more efficient per “token” (the basic unit of text AI processes). Tech giants have optimized their hardware and software to reduce the energy required for “Inference” (the act of answering you). This means that while the aggregate water usage of AI companies is rising due to volume, the water cost per individual interaction is dropping.

Why Does Software Need Water? (The Mechanics)

It feels counterintuitive. Software is code; code is virtual. Why does it need physical water? The answer lies in thermodynamics: the electricity a server uses ends up as heat, and that heat has to go somewhere.

1. Direct Water (The Thirsty Servers)

Data centers are essentially giant buildings filled with thousands of computers (servers) running 24/7. These servers generate immense heat. If they get too hot, they crash. To prevent this, data centers use cooling towers that rely on evaporative cooling.

Think of it like human sweat. When water evaporates, it absorbs heat from the environment. Data centers spray water into cooling towers to chill the air that circulates around the servers. This is where the majority of the “consumed” water goes—it evaporates into the atmosphere.

2. Indirect Water (The Power Plant)

The second factor is electricity. The servers need power to run. If that electricity comes from a hydroelectric dam (which loses water to evaporation from reservoirs) or a thermoelectric plant (coal, nuclear, gas) that uses steam turbines, water is consumed in the generation of the electricity before it even reaches the data center.
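
The two factors above can be combined into a rough back-of-the-envelope estimate. The sketch below is a common simplification, not an official formula from any AI provider: direct water is the server energy times the on-site WUE (liters per kWh), and indirect water is the server energy times the facility overhead (PUE) times the water intensity of the local grid. Every input value in the example is a placeholder we made up to show the mechanics.

```python
def water_per_query_ml(
    energy_wh: float,             # server energy for one query, in watt-hours
    wue_onsite_l_per_kwh: float,  # on-site cooling water per kWh of server energy
    pue: float,                   # facility energy divided by server energy
    grid_l_per_kwh: float,        # water consumed per kWh of electricity generated
) -> tuple[float, float]:
    """Return (direct_ml, indirect_ml) for a single query."""
    energy_kwh = energy_wh / 1000.0
    direct_l = energy_kwh * wue_onsite_l_per_kwh
    # The power plant must supply the whole facility, so scale by PUE.
    indirect_l = energy_kwh * pue * grid_l_per_kwh
    return direct_l * 1000.0, indirect_l * 1000.0

# Placeholder inputs for illustration only, not measurements.
direct_ml, indirect_ml = water_per_query_ml(
    energy_wh=3.0, wue_onsite_l_per_kwh=0.5, pue=1.2, grid_l_per_kwh=3.0
)
print(f"direct: {direct_ml:.1f} ml, indirect: {indirect_ml:.1f} ml")
```

With inputs like these, the power plant side can outweigh the cooling towers, which is why the electricity mix matters as much as the cooling design.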

Training vs. Inference

It is also vital to distinguish between the two phases of AI life:

- The Training Lake: This is the millions of liters used once to teach the model everything it knows.

- The Inference Cup: This is the ongoing, daily water usage required to answer your specific question.

The “Location Lottery”: Why Where Matters More Than What

Here is the hidden variable that most users overlook: Location matters more than your prompt length.

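The water footprint of your ChatGPT or Gemini query is heavily dependent on where that specific request is processed. Data centers track this with a metric called WUE (Water Usage Effectiveness), measured in liters of water consumed per kilowatt-hour of IT energy. The lower the WUE, the less water each query costs.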

- Hot Climates (e.g., Arizona, Texas): In hot, dry climates, cooling towers have to work overtime. A query processed here consumes significantly more water.

- Cool Climates (e.g., Sweden, Ireland): In cooler regions, data centers can use “free cooling” (drawing in filtered outside air instead of evaporating water), which uses almost zero water.
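
To see how much the location can swing the result, here is a small sketch that applies two different WUE values (liters of water per kWh of IT energy) to the same query. The site names, the WUE values, and the energy per query are all hypothetical placeholders, not figures for any real data center.

```python
# Hypothetical WUE values in liters per kWh of IT energy.
# Placeholders for illustration, not measurements of real facilities.
HYPOTHETICAL_WUE = {"hot_dry_site": 1.8, "cool_site": 0.1}
QUERY_ENERGY_WH = 3.0  # assumed energy per query, also a placeholder

def cooling_water_ml(energy_wh: float, wue_l_per_kwh: float) -> float:
    """On-site cooling water for one query, in milliliters."""
    energy_kwh = energy_wh / 1000.0
    return energy_kwh * wue_l_per_kwh * 1000.0

for site, wue in HYPOTHETICAL_WUE.items():
    print(f"{site}: {cooling_water_ml(QUERY_ENERGY_WH, wue):.2f} ml per query")
```

The prompt is identical in both cases; only the building it lands in changes.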

Unfortunately, as a user, you cannot currently select which data center processes your request. You are entered into a “Location Lottery” based on server traffic and your own geography.

How to Lower Your AI Water Footprint

If you are struggling with “Eco-guilt,” you don’t need to stop using AI. You just need to use it smarter. Here are three actionable ways to minimize your impact:

Eco-Friendly AI Tips

- Batch Your Queries: Every time you send a prompt, the AI has to re-process the “context” of the conversation. Instead of asking five separate short questions, combine them into one detailed prompt. This is more efficient for the hardware.

- Use “Mini” Models: Do you need a genius-level PhD model to summarize an email or write a thank-you note? Probably not. Switch to GPT-4o-mini or Gemini Flash. These smaller models require a fraction of the computing power (and water) of their larger counterparts.

- Avoid Redundancy: We’ve all done it—regenerating an answer just to see if the second version is slightly better. Try to accept the first answer if it meets your needs.
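
The batching tip is easier to appreciate with a quick count. The sketch below assumes a simplified chat model in which every new turn re-reads the entire conversation so far, with no caching. Real services may cache earlier context, so treat this as an upper bound on the difference. The token counts are made-up round numbers.

```python
def input_tokens_multi_turn(question_tokens: list[int], answer_tokens: list[int]) -> int:
    """Input tokens read across a chat where each turn re-reads all history."""
    total = 0
    history = 0
    for q, a in zip(question_tokens, answer_tokens):
        total += history + q   # the model reads the history plus the new question
        history += q + a       # the question and its answer join the history
    return total

def input_tokens_batched(question_tokens: list[int]) -> int:
    """Input tokens read when all questions go in one combined prompt."""
    return sum(question_tokens)

# Five short questions (20 tokens each) with 100-token answers. Illustrative sizes.
questions = [20] * 5
answers = [100] * 5
print("five separate turns:", input_tokens_multi_turn(questions, answers))
print("one batched prompt: ", input_tokens_batched(questions))
```

The answers cost the same to generate either way. The saving comes from not re-reading the growing history on every turn.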

Final Verdict: Should You Feel Guilty?

Let’s put that 10ml to 25ml in perspective. That is about one sip of your morning coffee. In fact, the water footprint of growing the beans for that one cup of coffee (approx. 140 liters) is vastly higher than asking an AI to write a poem about it.
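
For a sense of scale, this short sketch divides the coffee figure quoted above by the per-prompt range from this article. It uses only numbers already in the text; the function name is ours.

```python
COFFEE_CUP_LITERS = 140.0  # approximate water footprint of one cup, as quoted above

def prompts_per_coffee(ml_per_prompt: float) -> float:
    """How many prompts add up to the water footprint of one cup of coffee."""
    return COFFEE_CUP_LITERS * 1000.0 / ml_per_prompt

for ml in (25.0, 10.0):
    print(f"At {ml:.0f} ml per prompt: about {prompts_per_coffee(ml):,.0f} prompts per cup")
```

Even at the high end of the range, it takes thousands of prompts to match a single cup.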

While the aggregate global impact of AI water usage is a massive challenge that tech giants must solve (through water recycling and better cooling), your individual impact as a user is manageable.
