Transparency Note: This article may contain affiliate links. If you choose to purchase through these links, we may receive a small commission at no extra cost to you.

Can AI Chatbots Make Mistakes? How to Spot, Explain, and Fix Wrong Answers

You’ve likely been there: You’re in the middle of a high-stakes project, leaning on your favorite AI tool to speed things up. You ask for a specific legal precedent, a medical statistic, or a summary of a recent tech merger. The AI responds instantly with perfect grammar and total confidence. You copy, paste, and move on—only to discover later that the statistic was inflated, the legal case doesn’t exist, and the “merger” was actually a rumor from a 2019 Reddit thread.

It feels like being gaslit by a machine. So, can AI chatbots make mistakes, or is the technology just not ready for prime time? The truth is that while AI is a productivity powerhouse, the way it works also makes it a “hallucination machine” by design. Understanding why these errors happen is the difference between an AI power user and someone who gets burned by a bot.

The Problem: Confident AI, Embarrassing Errors

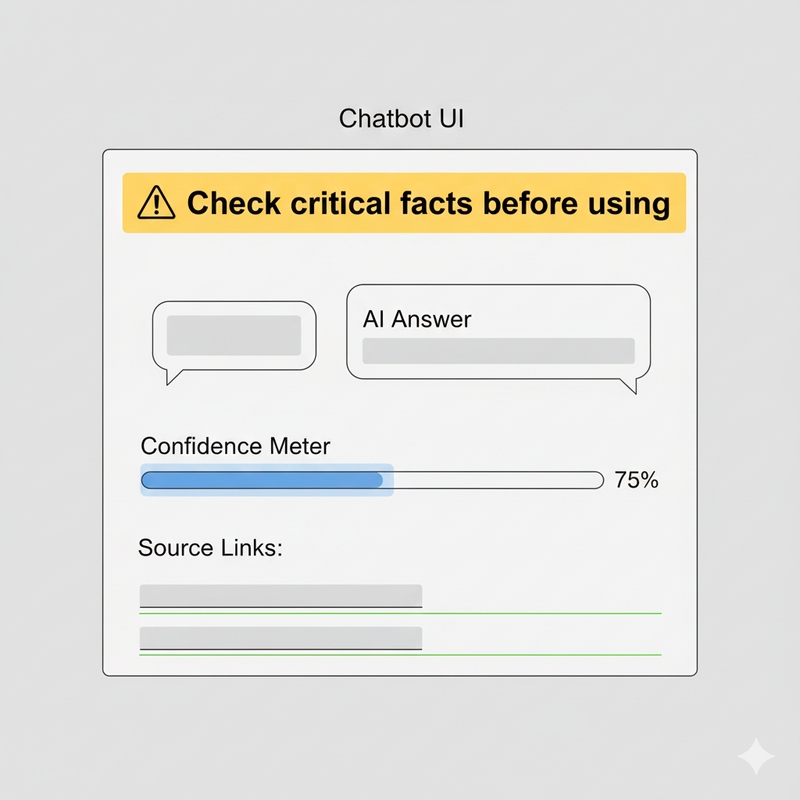

The most dangerous thing about a chatbot isn’t that it’s wrong—it’s that it sounds so right. Most human experts will hesitate, use words like “maybe,” or admit when they are out of their depth. AI chatbots, however, are optimized for fluency. They are built to provide a satisfying, coherent answer, even if they have to invent facts to do it.

For professionals and students, this creates a massive “Trust Gap.” If you send an AI-generated report to a client that contains a fabricated URL or a logical contradiction, the damage to your reputation is immediate. The core pain is that these errors are often invisible at first glance because the grammar is flawless.

Real-World Examples of AI Getting It Wrong

- Invented Citations: Asking for “academic papers on X” often results in real-sounding titles written by real-sounding authors that simply do not exist.

- Mathematical Logic: Asking a chatbot to solve a word problem sometimes leads to “correct-looking” steps that result in an impossible final number.

- The Knowledge Cutoff: Since many AI models are trained on snapshots of the internet, they often guess what happened *after* their training ended, leading to incorrect summaries of current events.

Why AI Chatbots Make Mistakes (Without “Thinking”)

To fix the problem, you have to understand that the chatbot isn’t “thinking” like you do. It is a highly advanced version of the autocomplete (predictive text) feature on your phone’s keyboard. It doesn’t look up answers in a database of facts; it predicts the most statistically likely next word in a sentence.

Because it operates on probability rather than “truth,” it prioritizes a plausible-sounding sentence over an accurate one. If its training data (the billions of pages of text it read) contains biases, outdated info, or flat-out lies, the AI will repeat them with the same confidence it uses for the alphabet.
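To make the “autocomplete” idea concrete, here is a deliberately tiny sketch in Python. It is a toy bigram counter, not how real chatbots are built (they use large neural networks that consider the whole conversation), and the four-sentence corpus is invented for illustration. Because “sydney” follows “is” more often than “canberra” does in this made-up text, the most frequent next word wins even though it produces a false statement:

```python
from collections import Counter, defaultdict

# A tiny, invented "training corpus" (real models read billions of pages).
# Note that "sydney" follows "is" more often than "canberra" does.
corpus = (
    "the capital of australia is canberra . "
    "the largest city in australia is sydney . "
    "the most famous city in australia is sydney . "
    "the opera house is in sydney ."
).split()

# Count how often each word follows each other word (a bigram model).
follow_counts = defaultdict(Counter)
for current, following in zip(corpus, corpus[1:]):
    follow_counts[current][following] += 1


def predict_next(word):
    """Return the word most often seen after `word`, or None if unseen."""
    followers = follow_counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def complete(prompt, max_words=5):
    """Extend the prompt one most-likely word at a time, stopping at '.'."""
    words = prompt.split()
    for _ in range(max_words):
        nxt = predict_next(words[-1])
        if nxt is None or nxt == ".":
            break
        words.append(nxt)
    return " ".join(words)


print(complete("the capital of australia is"))
# prints: the capital of australia is sydney
```

The toy model never checks whether its sentence is true; it only picks the word it has seen most often in that position. Real models are vastly more sophisticated, but the underlying goal of predicting likely text, rather than verifying facts, is the same.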

The Four Main Error Types Users See

- Factual Errors: “The capital of Australia is Sydney.” (It’s Canberra).

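- Logical Errors: “If 3 shirts take 3 hours to dry, 30 shirts take 30 hours.” (Linear logic fails here: shirts hung side by side dry at the same time, so 30 shirts still take about 3 hours if there is room for all of them.)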

- Hallucinations: Creating a fake law or a fake website URL that looks perfectly formatted.

- Context/Tone Errors: Giving a lighthearted, jokey answer to a prompt about a sensitive HR issue.
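The shirt-drying mistake is easy to see once both assumptions are written out. The Python sketch below compares the flawed proportional answer with a parallel-drying model; `line_capacity` (how many shirts fit on the line at once) is a hypothetical parameter added for illustration, not part of the original example:

```python
import math


def naive_drying_hours(shirts, base_shirts=3, base_hours=3):
    """The flawed 'linear' answer: time grows in proportion to shirt count."""
    return base_hours * shirts / base_shirts


def parallel_drying_hours(shirts, hours_per_batch=3, line_capacity=None):
    """Shirts on a line dry at the same time. Extra batches only matter if
    the line can't hold them all (line_capacity=None means unlimited room)."""
    if line_capacity is None:
        return hours_per_batch
    batches = math.ceil(shirts / line_capacity)
    return hours_per_batch * batches


print(naive_drying_hours(30))                       # 30.0
print(parallel_drying_hours(30))                    # 3
print(parallel_drying_hours(30, line_capacity=10))  # 9
```

A chatbot that pattern-matches “3 for 3, so 30 for 30” is effectively running the first function when the situation calls for the second.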

Why Chatbots Hallucinate Instead of Saying “I Don’t Know”

Users tend to find it frustrating when an AI frequently says “I don’t know,” and many models are “rewarded” during training for providing helpful, complete answers. This creates a side effect: the AI would rather guess (hallucinate) than admit it is stumped, especially if the user’s prompt is vague.

High-Risk Situations Where Mistakes Are More Dangerous

While a mistake in a creative poem is harmless, errors in “Your Money or Your Life” (YMYL) categories can be devastating. You should be on high alert when using AI for:

- Medical Advice: Dosages or symptoms.

- Legal Research: Case law or filing requirements.

- Financial Planning: Tax codes or investment math.

- Breaking News: Real-time events occurring right now.

Troubleshooting When Your Chatbot Answer Looks Wrong

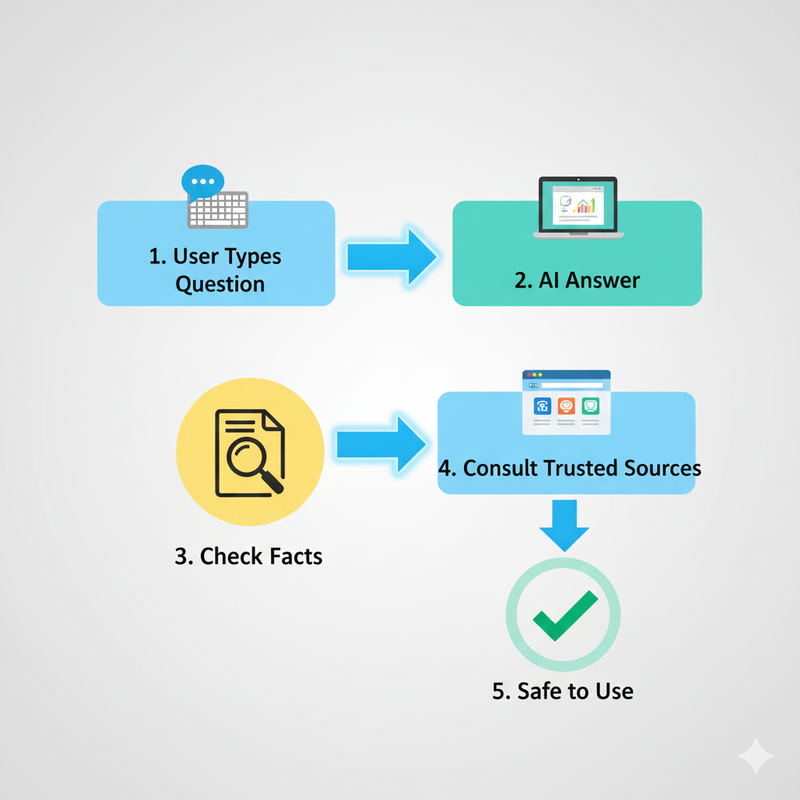

When you suspect an answer is off, don’t just give up. Use this “debugging” workflow to steer the AI back to reality.

Step 1: Rewrite Your Question (Provide a “Fair Chance”)

Vague prompts lead to vague (and wrong) answers.

- Bad: “Tell me about the history of the company.”

- Good: “Summarize the history of [Company Name] between 2010 and 2022, focusing specifically on their acquisitions. Only use verified public records.”

Step 2: Ask for Reasoning, Not Just Results

Force the AI to “think out loud” by using Chain-of-Thought prompting. Ask: “Explain your reasoning step-by-step before giving me the final answer.” This can expose a logical flaw in the middle of the process, which the AI may then catch and correct, or which you can spot yourself.

Step 3: Verify Critical Facts

Treat AI like an eager but unreliable intern. If it gives you a citation, search for that citation in a separate tab. If it gives you a phone number or a specific date, verify it via an official source. Never use an AI-generated URL without clicking it first.

Safer Workflows for Using AI Chatbots Day to Day

To gain the productivity benefits of AI without getting burned, adopt the “Human-in-the-Loop” model. The AI handles the “heavy lifting” (drafting, formatting, summarizing), and you handle the “high-level” tasks (fact-checking, tone-polishing, and final approval).

A Simple Safety Checklist for Non-Technical Users

- Label it: Clearly mark AI-generated drafts so others know they need review.

- The 3-Fact Rule: Pick the three most important facts in the output and verify them elsewhere.

- Constraint Prompting: Tell the AI, “If you are unsure of a fact, tell me you don’t know rather than guessing.”
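If you reach a chatbot through code or reuse the same prompts often, the two instructions from this article (the step-by-step request from Step 2 and the Constraint Prompting line) can be added automatically. This is a minimal Python sketch; `build_careful_prompt` is a hypothetical helper, and the added wording reduces guessing but does not guarantee accurate answers:

```python
def build_careful_prompt(question, show_reasoning=True):
    """Hypothetical helper: append the article's safety instructions to a question.

    The extra wording nudges the model away from guessing; it does not
    guarantee a correct answer, so the output still needs checking.
    """
    parts = [question.strip()]
    if show_reasoning:
        # Step 2: ask for reasoning, not just results.
        parts.append(
            "Explain your reasoning step-by-step before giving me the final answer."
        )
    # Constraint prompting: invite "I don't know" instead of a guess.
    parts.append(
        "If you are unsure of a fact, tell me you don't know rather than guessing."
    )
    return "\n\n".join(parts)


print(build_careful_prompt("Summarize the main causes of the 2008 financial crisis."))
```

Even with these instructions in place, the 3-Fact Rule still applies: verify the most important claims yourself.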

Putting It All Together: Using Imperfect AI

So, can AI chatbots make mistakes? Absolutely. But a hammer can also slip and hit your thumb if you don’t know how to grip it.

The goal isn’t to find a “perfect” AI that never lies—that doesn’t exist yet. The goal is to become a savvy operator who knows when to trust the bot and when to pull out the magnifying glass. Treat AI as a fast assistant, not an infallible oracle, and you’ll find that even an imperfect tool can still change the way you work for the better.
