Transparency Note: This article may contain affiliate links. If you purchase through these links, we may earn a small commission at no extra cost to you.

How Many Parameters Does GPT-5 Really Have? A Practical Guide for Engineers

If you’ve opened three tabs and found three different parameter counts for GPT-5, you’re not alone, and there’s a good reason nobody can give you a single “correct” number. If you are a developer or technical lead trying to pin down GPT-5’s parameter count to benchmark your next project, the lack of an official datasheet is more than an annoyance; it’s a hurdle for capacity planning and stakeholder management.


The Real Problem: Everyone Wants a Simple Number

As engineers, we thrive on hard specifications. We want “the number” so we can compare GPT-5 against GPT-3 or GPT-4, estimate inference latency, and justify infrastructure budgets to stakeholders. However, searching for GPT-5’s parameter count usually leads down a rabbit hole of conflicting claims.

The credibility risk here is real. If you walk into a boardroom with a slide claiming a flat “5 trillion parameters,” you risk looking uninformed if a technically savvy peer asks whether you’re referring to dense-equivalent weights or a Mixture-of-Experts (MoE) total. Since OpenAI has not published an official count, any single number presented as “fact” is essentially a guess.

Why This Matters for Practitioners

Parameter myths aren’t just academic; they affect real engineering decisions. Overestimating the model size might lead you to over-budget for compute or unnecessarily rule out certain real-time applications. Conversely, underestimating the complexity of the architecture could lead to over-promising on how “lean” your integration will be. We need to reframe the question. Instead of chasing an exact count, ask: how big is GPT-5 in practice, and how should I talk about it?

Why GPT-5 Parameter Numbers Are All Over the Place

If you’re asking how many parameters GPT-5 has, you’ve likely seen figures ranging from 1.5 trillion to over 50 trillion. Here is why the data is so fragmented.

No Official Number From OpenAI

OpenAI has shifted its communication strategy. While the GPT-3 era was defined by the “175B” headline, the GPT-4 technical report explicitly declined to disclose model size, and the company now focuses almost exclusively on capabilities, safety benchmarks, and system behavior. That leaves a vacuum that third-party blogs and “leakers” fill with back-of-the-envelope scaling-law estimates, which eventually get repeated as gospel.

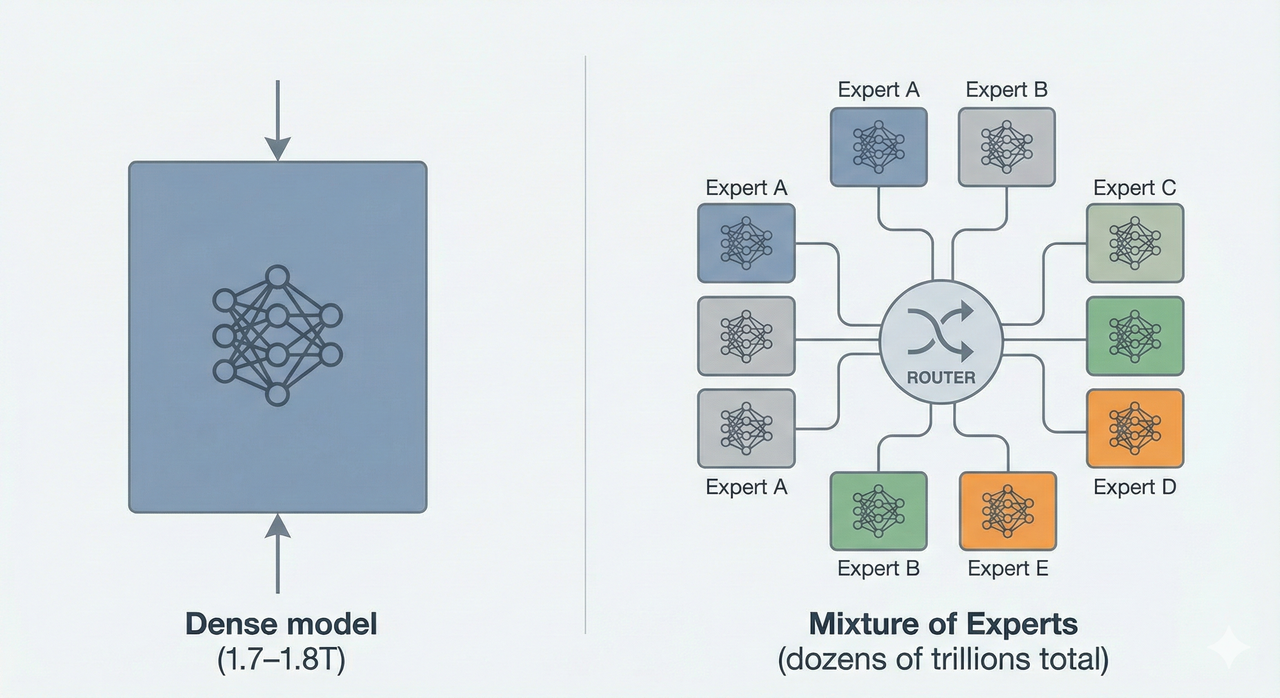

Dense vs. Mixture-of-Experts: Two Ways to Count

This is the primary source of confusion.

- Dense Models: In a traditional dense transformer, every weight is active for every token, so the total and active parameter counts are the same number.

- Mixture-of-Experts (MoE): This architecture uses a “router” to send each token to only a few specialized “expert” subnetworks, so the model stores far more parameters than any single token actually uses.

When people ask how many parameters GPT-5 has, one source might quote the total stored parameters (which could be in the tens of trillions), while another quotes the active parameters per token (the “dense-equivalent” size). Both are technically “correct” depending on your definition, but they describe very different hardware requirements: total parameters drive memory, while active parameters drive per-token compute.
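To make the two counting conventions concrete, here is a minimal Python sketch. The `MoEConfig` fields and the toy numbers are hypothetical, chosen only to show how far apart the two counts can drift; they are not GPT-5’s actual layout, which is unpublished. It also simplifies by lumping all non-expert weights (embeddings, attention, and so on) into a single shared term.

```python
from dataclasses import dataclass


@dataclass
class MoEConfig:
    """Hypothetical MoE layout; none of these values are known for GPT-5."""
    shared_params: float      # weights every token uses (embeddings, attention, ...)
    params_per_expert: float  # parameters in one expert, summed over all layers
    num_experts: int          # experts stored in the model
    experts_per_token: int    # experts the router activates for each token


def total_params(cfg: MoEConfig) -> float:
    """Everything that must be stored: shared weights plus every expert."""
    return cfg.shared_params + cfg.num_experts * cfg.params_per_expert


def active_params(cfg: MoEConfig) -> float:
    """What one token actually touches: shared weights plus the routed experts."""
    return cfg.shared_params + cfg.experts_per_token * cfg.params_per_expert


# Illustrative numbers only (assumption), picked to show the gap between counts.
toy = MoEConfig(shared_params=0.2e12, params_per_expert=0.4e12,
                num_experts=64, experts_per_token=2)
print(f"total:  {total_params(toy) / 1e12:.1f}T")   # -> total:  25.8T
print(f"active: {active_params(toy) / 1e12:.1f}T")  # -> active: 1.0T
```

For a dense model, set `num_experts` and `experts_per_token` to zero and both functions return the same number, which is exactly why dense parameter counts were never ambiguous.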

Multiple GPT-5 Variants, Not Just One Model

It is highly likely that “GPT-5” isn’t one monolithic model. Like its predecessors, it probably ships in tiers, perhaps a “High” tier for complex reasoning and “Mini” tiers for low-latency tasks. Articles focusing on different tiers will naturally report wildly different parameter counts.

Solution – A Clear, Honest Way to Talk About GPT-5’s Size

So, how do you handle this in a professional environment?

The One-Sentence Answer You Can Put on a Slide

If you need to summarize the situation for an executive or a client, use this:

“While OpenAI hasn’t published an official count, industry analysis suggests GPT-5 operates at a low-trillion dense-equivalent scale, likely utilizing a Mixture-of-Experts (MoE) architecture with total capacity in the tens of trillions, though only a fraction of those are active per token.”

A Practical Mental Model for GPT-5 Parameters

Think of GPT-5 as roughly an order of magnitude larger than GPT-3’s 175B parameters on a dense-equivalent basis (about 1.7–1.8T by the estimates below), but far more efficient per token than a simply “bigger” dense model would be. Distinguish between the storage footprint (the total MoE parameters you need to keep in memory) and the compute cost (the active parameters that actually “fire” during an inference call).
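Here is a rough Python sketch of that storage-versus-compute split. It assumes 2 bytes per weight (FP16/BF16) and the common rule of thumb of about 2 FLOPs per active parameter per generated token, ignoring attention over the context, the KV cache, and activations. The `est_total` and `est_active` inputs are placeholders picked from the ranges quoted in this article, not official figures.

```python
def storage_gb(total_params: float, bytes_per_param: float = 2.0) -> float:
    """Weight memory in GB (1 GB = 1e9 bytes); 2 bytes/param assumes FP16/BF16."""
    return total_params * bytes_per_param / 1e9


def forward_gflops_per_token(active_params: float) -> float:
    """Rule of thumb: ~2 FLOPs per active parameter per token (attention ignored)."""
    return 2 * active_params / 1e9


# Placeholder inputs based on the estimate ranges in this article (not official).
est_total = 20e12    # "tens of trillions" stored across all experts
est_active = 1.8e12  # "low-trillion" active / dense-equivalent size

print(f"weights to hold in memory: {storage_gb(est_total):,.0f} GB")
print(f"compute per generated token: {forward_gflops_per_token(est_active):,.0f} GFLOPs")
```

The point of keeping the two functions separate is that they take different inputs: memory scales with the total count, while per-token compute scales only with the active count.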

Deep Dive – Where Current Estimates Come From

If you want to look under the hood, here is where the “leaked” and “calculated” numbers for GPT-5’s parameter count actually originate.

The Low-Trillion Dense-Equivalent Estimates (≈1.7–1.8T)

By extrapolating from GPT-4’s rumored size and applying standard scaling laws (which relate training compute, training data, and parameter count), many researchers land on a figure around 1.7T to 1.8T parameters. This is the “dense-equivalent” core, the size the model would be if it didn’t use the MoE trick.
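The scaling-law arithmetic behind this kind of extrapolation can be sketched in a few lines. This sketch assumes two widely used approximations: training compute C ≈ 6·N·D (N parameters, D training tokens) and the Chinchilla compute-optimal ratio of roughly 20 tokens per parameter (Hoffmann et al., 2022), which together give N ≈ sqrt(C / 120). The 3.6e26 FLOP budget is an arbitrary illustrative input, not a reported figure for GPT-5, and the formulas treat the model as dense.

```python
import math

TOKENS_PER_PARAM = 20  # approximate Chinchilla compute-optimal ratio


def training_flops(params: float, tokens: float) -> float:
    """Standard approximation: ~6 FLOPs per parameter per training token."""
    return 6 * params * tokens


def compute_optimal_params(train_flops: float,
                           tokens_per_param: float = TOKENS_PER_PARAM) -> float:
    """Solve C = 6 * N * D with D = tokens_per_param * N for N."""
    return math.sqrt(train_flops / (6 * tokens_per_param))


# Hypothetical budget; OpenAI has not disclosed GPT-5's training compute.
budget = 3.6e26
n = compute_optimal_params(budget)
d = TOKENS_PER_PARAM * n
print(f"params ~ {n / 1e12:.2f}T, tokens ~ {d / 1e12:.0f}T")  # -> params ~ 1.73T, tokens ~ 35T
```

Labs often train well past 20 tokens per parameter to make inference cheaper, and at a fixed compute budget that pushes the parameter estimate down, which is one reason these extrapolations disagree.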

The “Dozens of Trillions” MoE Story

More aggressive estimates posit a massive MoE configuration, potentially totaling 50T to 52T parameters. While this sounds astronomical, remember that in an MoE setup the model might use only about 1T parameters for any given token it generates. This makes the model “wide” (a huge knowledge base) but “narrow” (fast per-token processing).

How This Affects Real-World Engineering Decisions

Capacity Planning and Cost Expectations

When you ask how many parameters GPT-5 has, your real concern is likely: can I afford to run this? Parameter scale translates directly into GPU memory (VRAM) requirements. Even if only a fraction of the parameters are active per token, the entire model usually must stay in memory for high-performance serving. Expect GPT-5-class models to require large H100/B200 clusters, and look for vendor optimizations that specifically target MoE routing to reduce actual API costs.
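A back-of-the-envelope way to turn a parameter estimate into hardware is to divide the weight memory by the usable memory per GPU. The `min_gpus_for_weights` helper below is hypothetical. It assumes 80 GB per GPU (the H100 SXM’s capacity) and reserves 20% of that for KV cache and runtime overhead as an arbitrary placeholder. It gives a lower bound for holding the weights only and ignores parallelism layout, interconnect, and throughput targets.

```python
import math


def min_gpus_for_weights(total_params: float, bytes_per_param: float,
                         gpu_mem_gb: float, usable_fraction: float = 0.8) -> int:
    """Lower bound on GPUs needed just to hold the model weights.

    usable_fraction reserves headroom for KV cache, activations and runtime
    overhead; 0.8 is an arbitrary placeholder, not a measured value.
    """
    weight_gb = total_params * bytes_per_param / 1e9
    return math.ceil(weight_gb / (gpu_mem_gb * usable_fraction))


# Hypothetical totals from the estimate ranges in this article, on 80 GB GPUs.
for label, params in [("1.8T", 1.8e12), ("20T", 20e12)]:
    for fmt, nbytes in [("BF16", 2), ("FP8", 1)]:
        print(label, fmt, min_gpus_for_weights(params, nbytes, gpu_mem_gb=80))
```

Halving the bytes per parameter (BF16 to FP8) roughly halves the GPU count, which is why precision choices weigh heavily for MoE models: every expert has to stay resident even though only a few are used per token.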

Model Selection and Trade-offs

Understanding the tiers helps you choose.

- Max Quality: Use the “High” variant (highest parameter count).

- Cost-Optimized: Use the “Mini” or “Turbo” variants, which likely have significantly fewer parameters and lower latency.

Quick FAQ for Stakeholder Conversations

Q: Is GPT-5 bigger than GPT-4?

A: Probably, though neither model’s size has been officially disclosed. It is also likely “smarter” due to architectural efficiency (MoE) rather than just having more raw weights.

Q: Do more parameters always mean better performance?

A: No. Data quality, training duration, and “reasoning” architectures (like those found in the o1 series) often matter more than the raw parameter count.

Q: Why won’t OpenAI publish the exact number?

A: It is a competitive advantage. Additionally, as models move toward “systems of models,” a single number becomes technically misleading.

Q: Can we trust third-party estimates?

A: Treat them as ballparks. Always label these figures as “estimates” in your own documentation to maintain technical credibility.
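One way to enforce that habit in internal docs or dashboards is to store each figure together with its kind and source rather than as a bare number. This is a hypothetical Python sketch; the `ParamEstimate` class and its fields are illustrative, and the ranges are the third-party estimates discussed above.

```python
from dataclasses import dataclass
from typing import Literal


@dataclass(frozen=True)
class ParamEstimate:
    """A parameter figure kept with the context needed to read it correctly."""
    low: float
    high: float
    kind: Literal["total", "active", "dense-equivalent"]  # what is being counted
    source: str             # where the figure came from
    official: bool = False  # OpenAI has published no official count

    def label(self) -> str:
        status = "official" if self.official else "estimate"
        return (f"{self.low / 1e12:g}-{self.high / 1e12:g}T {self.kind} params "
                f"({status}; source: {self.source})")


dense_eq = ParamEstimate(1.7e12, 1.8e12, "dense-equivalent", "scaling-law extrapolation")
moe_total = ParamEstimate(50e12, 52e12, "total", "third-party MoE estimate")
print(dense_eq.label())
print(moe_total.label())
```

Because `official` defaults to `False`, every label reads “estimate” unless someone deliberately marks a figure as official.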

Recommended Phrasing for Different Audiences

- For Executives: “GPT-5 is estimated to be roughly an order of magnitude larger than GPT-3, but it uses smarter routing to keep costs manageable.”

- For Product Managers: “We should budget for GPT-5 as a low-trillion scale model, but wait for official tokens-per-dollar benchmarks to finalize our ROI.”

- For Technical Peers: “It’s likely a sparse MoE model. Estimates put it at roughly 1.8T dense-equivalent, with a much larger total parameter footprint, likely 20T or more, distributed across experts.”

