How to Become an AI Prompt Engineer: From Hobbyist to High-Paid Pro


Let’s be honest for a second. It feels like everyone with a ChatGPT Plus subscription suddenly has “AI Expert” in their bio. You’ve seen the posts on LinkedIn: “I asked AI to write me a poem about coffee, and look at the result!”

But deep down, you know there’s a massive gap between getting a chatbot to write a funny limerick and building a reliable, enterprise-grade AI system. You might be feeling a mix of Imposter Syndrome (“Am I really technical enough for this?”) and FOMO (“Is this career path already saturated?”).

Here is the truth: The market is flooded with “prompters,” but it is starving for engineers.

If you want to know how to become an AI prompt engineer—the kind who gets hired by tech companies to solve expensive problems—you need to stop treating this like creative writing and start treating it like a science.

In this guide, I’m going to walk you through the transition from “AI Hobbyist” to “AI Engineer.” We are going to look at the raw technical primitives, structured outputs, and the portfolio strategies that actually prove your value.

The “Bleeding Neck” Problem: Why “Chatting” Isn’t Enough

The biggest misconception about how to become an AI prompt engineer is that you just need to be good at English. While clear communication helps, businesses don’t pay six figures for someone who can just talk to a bot.

They pay for reliability.

- A hobbyist prompts an AI and says, “Wow, that’s cool.”

- An engineer prompts an AI and asks, “Will this work 1,000 times in a row without hallucinating?”

To bridge this gap, you must master “LLM Ops.” This is the discipline of controlling the AI’s randomness, enforcing specific formats (like JSON), and optimizing for cost (tokens). When a company builds a customer service bot, they cannot afford for it to go rogue. They need an engineer who can constrain the model so tightly that it behaves like a piece of software, not a creative writing partner.

Phase 1: Master the “Primitives” (The Dashboard Behind the Chat)

Most people only ever see the chat box. To stand out, you need to understand what’s happening under the hood. When you use the OpenAI API or advanced playgrounds, you get access to sampling parameters (often loosely called “hyperparameters”): the knobs and dials that control how the model picks each word.

1. Temperature & Top-P

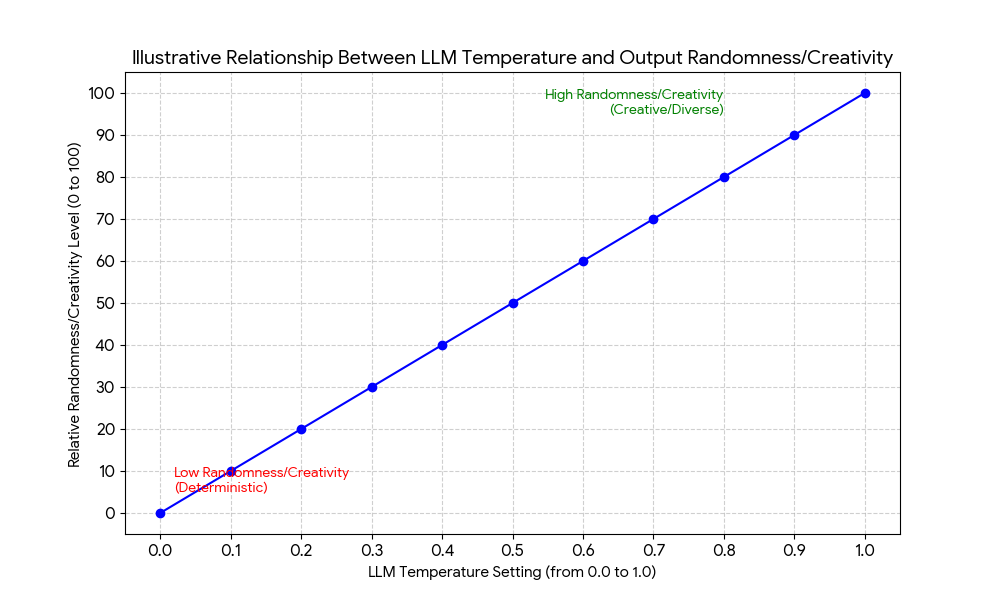

These control creativity versus determinism.

- Temperature (0.0 – 2.0): This controls the randomness of the output. A low temperature (e.g., 0.2) makes the model focused, deterministic, and repetitive—perfect for code generation or data extraction. A high temperature (e.g., 0.8) makes it creative and random—better for brainstorming or poetry.

- Top-P (Nucleus Sampling): This is a different method of sampling the next token. It keeps only the most likely tokens whose combined probability reaches P (e.g., 0.9) and cuts off the “long tail” of unlikely words.

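In my experience, it is best not to change both Temperature and Top-P at the same time. Pick one variable to tune. For reliable business logic, start with Temperature = 0, and keep in mind that even then the output is not guaranteed to be identical on every run.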
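To make the two knobs concrete, here is a minimal sketch of what they do to a next-token distribution. The token list and scores are made up for illustration, and real APIs apply these steps internally, so treat this as a toy model of the idea rather than any provider's implementation.

```python
import math

def apply_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        # Treat 0 as greedy decoding: all probability goes to the top score.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_p_filter(probs, top_p):
    """Keep the smallest set of most likely tokens whose probabilities reach top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}  # renormalize the survivors

# Hypothetical candidates for the next word and their raw scores.
tokens = ["blue", "grey", "green", "purple"]
logits = [2.0, 1.0, 0.5, -1.0]

cold = apply_temperature(logits, 0.2)   # mass concentrates on "blue"
hot = apply_temperature(logits, 1.5)    # mass spreads across all four
nucleus = top_p_filter(apply_temperature(logits, 1.0), 0.9)  # drops the tail
```

With these made-up scores, the low temperature puts far more probability on the top token than the high temperature does, and the Top-P filter at 0.9 drops the least likely token entirely.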

2. The Context Window

The “Context Window” is the model’s short-term memory. It is finite (measured in tokens). If you feed the model a 50-page PDF and the window is too small, the input is either rejected or truncated, and the AI effectively “forgets” whatever was cut (often the beginning).

A real engineer knows how to calculate token usage to ensure critical instructions aren’t dropped. You must learn to be ruthless with your words—every token costs money and memory.
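Here is a rough sketch of budgeting tokens so the system instructions are never the part that gets dropped. It assumes about 4 characters per token, which is only a ballpark heuristic for English text. For real counts you would use your provider's tokenizer. The function names and the budget value are hypothetical.

```python
def estimate_tokens(text):
    """Very rough estimate: assumes ~4 characters per token (heuristic, not a tokenizer)."""
    return max(1, (len(text) + 3) // 4)

def fit_to_budget(system_prompt, history, budget):
    """Always keep the system prompt, then keep the newest messages that still fit."""
    used = estimate_tokens(system_prompt)
    if used > budget:
        raise ValueError("system prompt alone exceeds the token budget")
    kept = []
    for message in reversed(history):  # walk from newest to oldest
        cost = estimate_tokens(message)
        if used + cost > budget:
            break  # everything older than this is dropped
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept, used

# Three messages of 400, 200, and 40 characters, oldest first.
history = ["a" * 400, "b" * 200, "c" * 40]
kept, used = fit_to_budget("Reply only in JSON.", history, budget=80)
```

In this example the oldest message is the one that gets cut, which is the deliberate choice here: the critical instructions live in the system prompt, and that is reserved first.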

3. System vs. User vs. Assistant Roles

In a chat interface, everything looks like a “message.” In the API, messages have specific roles that drastically change how the AI behaves:

- System: The “God Mode” instruction. It sets the behavior, tone, and constraints (e.g., “You are a helpful coding assistant that only outputs Python”).

- User: The specific request from the human.

- Assistant: The AI’s reply. In “Few-Shot” prompting, you can fake an Assistant message to show the AI how it should have replied in the past, effectively “pre-filling” its brain with the correct pattern.
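As a sketch, here is how the three roles might be assembled into a message list with faked Assistant turns for Few-Shot prompting. The `role`/`content` dictionary shape is the one used by several chat APIs, but some providers take the system prompt as a separate parameter, so check your provider's documentation. The helper name and example tickets are hypothetical.

```python
def build_messages(system_prompt, examples, user_input):
    """Build a chat message list: system rules, few-shot pairs, then the real request."""
    messages = [{"role": "system", "content": system_prompt}]
    for example_input, example_output in examples:
        # Each example is a fake past exchange that shows the desired pattern.
        messages.append({"role": "user", "content": example_input})
        messages.append({"role": "assistant", "content": example_output})
    messages.append({"role": "user", "content": user_input})
    return messages

messages = build_messages(
    system_prompt="You classify support tickets. Reply with one word.",
    examples=[
        ("My card was charged twice.", "billing"),
        ("The app crashes on login.", "technical"),
    ],
    user_input="I can't reset my password.",
)
```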

Phase 2: Structural Patterns (The Engineering Edge)

Learning how to become an AI prompt engineer means moving beyond “Ask and Answer.” You need to implement reasoning strategies that force the model to think before it speaks.

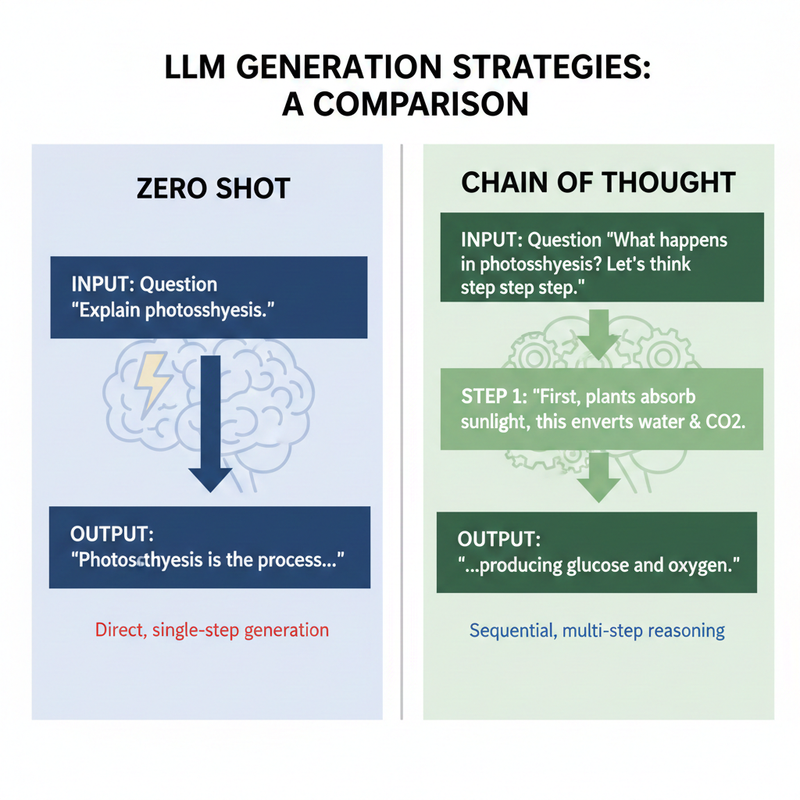

Chain-of-Thought (CoT)

Instead of asking for an answer, you ask for the steps. LLMs are terrible at mental math but decent at step-by-step logic.

- Standard Prompt: “How many tennis balls fit in a bus?” (Result: The AI guesses a random number).

- CoT Prompt: “Calculate how many tennis balls fit in a bus. Think step-by-step. First, estimate the volume of a bus. Second, estimate the volume of a tennis ball. Third, account for packing density.”
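The three steps in the CoT prompt are plain arithmetic once you write them down, which is why spelling them out helps the model. Here is that reasoning as a small sketch. Every number in it is an assumption for illustration: a 12 m x 2.5 m x 2 m bus interior, a 6.7 cm ball, and a packing density of 0.64 (roughly what randomly packed spheres achieve).

```python
import math

# Step 1: estimate the volume of the bus (assumed interior dimensions, in meters).
bus_volume = 12.0 * 2.5 * 2.0

# Step 2: estimate the volume of one tennis ball (assumed 6.7 cm diameter).
ball_radius = 0.067 / 2
ball_volume = (4 / 3) * math.pi * ball_radius ** 3

# Step 3: account for packing density (assumed 0.64 for randomly packed spheres).
packing_density = 0.64
ball_count = int(bus_volume * packing_density / ball_volume)

print(f"Estimated tennis balls: {ball_count:,}")
```

Under these assumptions the estimate lands in the low hundreds of thousands. The exact figure matters less than the fact that each step can be checked on its own.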


Few-Shot Prompting

This is the single most powerful tool in your kit. Instead of describing what you want, you show examples.

If a prompt fails more than 20% of the time, stop tweaking the instructions and add 3 clear examples (shots) of the desired input and output.
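To apply that 20% rule you need to measure the failure rate instead of eyeballing it. Here is a minimal sketch of such a check. The `fake_model` function is a hypothetical stand-in for a real API call, rigged to fail on some inputs so the example runs offline.

```python
import json

def is_json_object(text):
    """Return True only if the reply parses as a JSON object."""
    try:
        return isinstance(json.loads(text), dict)
    except json.JSONDecodeError:
        return False

def failure_rate(generate, inputs, is_valid):
    """Run the prompt over every input and return the share of invalid replies."""
    failures = sum(1 for item in inputs if not is_valid(generate(item)))
    return failures / len(inputs)

def fake_model(ticket):
    # Hypothetical stand-in for an LLM call: drifts into chatty prose on some inputs.
    if ticket.endswith(("3", "6", "9")):
        return "Sure! Here is the category: billing"
    return '{"category": "billing"}'

tickets = [f"ticket {i}" for i in range(10)]
rate = failure_rate(fake_model, tickets, is_json_object)
needs_examples = rate > 0.20  # the rule of thumb from the text
```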

ReAct (Reasoning + Acting)

This is advanced territory often used in AI Agents. You prompt the model to follow a loop:

- Thought: Analyze the user’s request.

- Action: Decide to use a tool (like a calculator or a Google Search).

- Observation: Read the result of that tool.

- Final Answer: Synthesize the data.
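The loop above can be sketched in a few lines. This is a toy version under stated assumptions: `scripted_model` is a hypothetical stand-in for the LLM, the `Action: tool[input]` text format is one common convention and not a standard, and the calculator only handles `number operator number`.

```python
import operator

OPERATORS = {"+": operator.add, "-": operator.sub,
             "*": operator.mul, "/": operator.truediv}

def calculator(expression):
    """Evaluate a simple 'a op b' expression such as '12 * 7'."""
    left, symbol, right = expression.split()
    return str(OPERATORS[symbol](float(left), float(right)))

TOOLS = {"calculator": calculator}

def scripted_model(transcript):
    # Hypothetical stand-in for an LLM: asks for a tool first, then answers.
    if "Observation:" not in transcript:
        return "Thought: I need to multiply.\nAction: calculator[12 * 7]"
    observation = transcript.rsplit("Observation: ", 1)[1].strip()
    return f"Thought: I have the result.\nFinal Answer: {observation}"

def react(question, model, tools, max_steps=5):
    """Alternate model replies and tool calls until a final answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        reply = model(transcript)
        transcript += reply + "\n"
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        action = next((line for line in reply.splitlines()
                       if line.startswith("Action:")), None)
        if action is None:
            raise ValueError("model reply had neither an action nor a final answer")
        name, _, argument = action[len("Action:"):].strip().partition("[")
        result = tools[name](argument.rstrip("]"))
        transcript += f"Observation: {result}\n"  # feed the tool result back in
    raise RuntimeError("no final answer within max_steps")

answer = react("What is 12 * 7?", scripted_model, TOOLS)
```

The `max_steps` cap is the engineering part: an agent that can loop forever is an agent that can burn tokens forever.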

Phase 3: The “Technical” Truth (Structured Outputs)

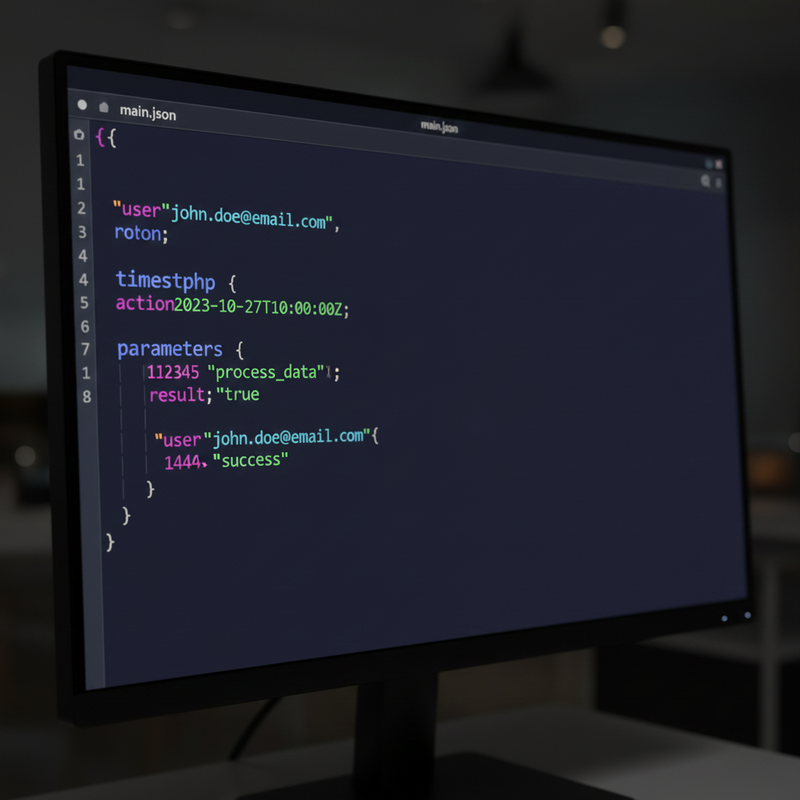

This is where the money is. A business cannot run code on a poem. They need data they can put into a database.

If you are figuring out how to become an AI prompt engineer, you must learn to prompt for Structured Outputs—specifically JSON or XML. This allows developers to parse the AI’s response reliably.

The Bad vs. The Good

Let’s look at a concrete example of how a hobbyist prompts versus how an engineer prompts.

| Feature | The Hobbyist Prompt | The Engineer Prompt |
|---|---|---|
| The Ask | “Write a product description for these running shoes.” | “Act as a Senior Copywriter. Write a description for [Product] targeting [Persona].” |
| Constraints | None (Vague) | “Constraints: Active voice, no jargon, max 150 words.” |
| Output Format | Plain Text paragraphs | “Output strictly in JSON with keys: headline, body, feature_list.” |
| Result | A wall of text that varies every time. | A data object that a website can automatically render. |

The Good Prompt Example (JSON Mode):

    SYSTEM: You are a data extraction assistant.
    USER: Analyze the text below and extract the user's name and intent.
    Output strictly in JSON format.
    {
      "name": "string",
      "intent": "purchase" | "support" | "inquiry",
      "confidence_score": "number (0-1)"
    }

This reliability is what separates the pros from the amateurs. A prompt alone cannot strictly guarantee the format, so you pair it with validation. When the format is enforced and checked, you enable the entire engineering team to build on top of your work.
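On the receiving end, the model's reply still has to be checked before it touches a database. Here is a minimal sketch of a validator for the schema in the prompt above. The function name and error messages are my own, and in production you might reach for a schema library instead.

```python
import json

ALLOWED_INTENTS = {"purchase", "support", "inquiry"}

def parse_extraction(raw):
    """Parse a model reply and validate it against the schema; raise ValueError on mismatch."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as error:
        raise ValueError(f"not valid JSON: {error}") from error
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if not isinstance(data.get("name"), str):
        raise ValueError("name must be a string")
    intent = data.get("intent")
    if not isinstance(intent, str) or intent not in ALLOWED_INTENTS:
        raise ValueError("intent must be purchase, support, or inquiry")
    score = data.get("confidence_score")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(score, bool) or not isinstance(score, (int, float)):
        raise ValueError("confidence_score must be a number")
    if not 0 <= score <= 1:
        raise ValueError("confidence_score must be between 0 and 1")
    return data

reply = '{"name": "Ada", "intent": "support", "confidence_score": 0.9}'
record = parse_extraction(reply)
```

If validation fails, a common pattern is to retry the request or route the item to a human instead of passing bad data downstream.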

Phase 4: Building Your “Before & After” Portfolio

When you apply for jobs, do not just send a link to a ChatGPT conversation. Recruiters don’t care that you can generate text; they care that you can solve problems.

Your portfolio needs to tell a story of optimization.

The “Case Study” Structure

For every project in your portfolio, follow this format:

- The Objective: “The client needed to classify 10,000 support tickets automatically to route them to the right department.”

- The Baseline (The Failure): Show the initial “Bad Prompt” and the error rate (e.g., “The AI hallucinated categories 40% of the time and missed urgent tickets”).

- The Iteration: Explain the technique you applied (e.g., “I implemented Few-Shot prompting with 5 examples of edge cases and lowered the Temperature to 0.1”).

- The Result: “Accuracy improved to 98%, and by optimizing the system prompt, I reduced token costs by 20%.”

This proves you understand the business value of AI, not just the novelty. It shows you are an AI prompt engineer who saves the company money.

Conclusion: Your Next Step

Learning how to become an AI prompt engineer is a journey from “magic spell caster” to “systems architect.” It requires you to respect the technology enough to learn its limitations, its cost structures, and its language (which, increasingly, is JSON).

Don’t let the technical terms scare you. You don’t need to be a full-stack developer to start, but you do need to adopt an engineering mindset. Start testing, start measuring, and start building systems that work reliably.

Ice Gan’s Recommended Next Step: Open a playground (like OpenAI’s Platform or Anthropic’s Console). Take a simple task you do every day (like summarizing emails) and try to write a System Prompt that outputs the summary in a strictly formatted JSON block. Once you can get it to work 10 times in a row without breaking, you are officially on the path.

Check out my guide on Advanced Python for Prompt Engineering to take this to the code level!
